Are AI Companion Apps Safe? Privacy & Risks (What You Need to Know)

AI companions whisper your deepest desires while hiding dangerous secrets. Uncover the hard truth about who - or what - is really listening to your most private digital moments.

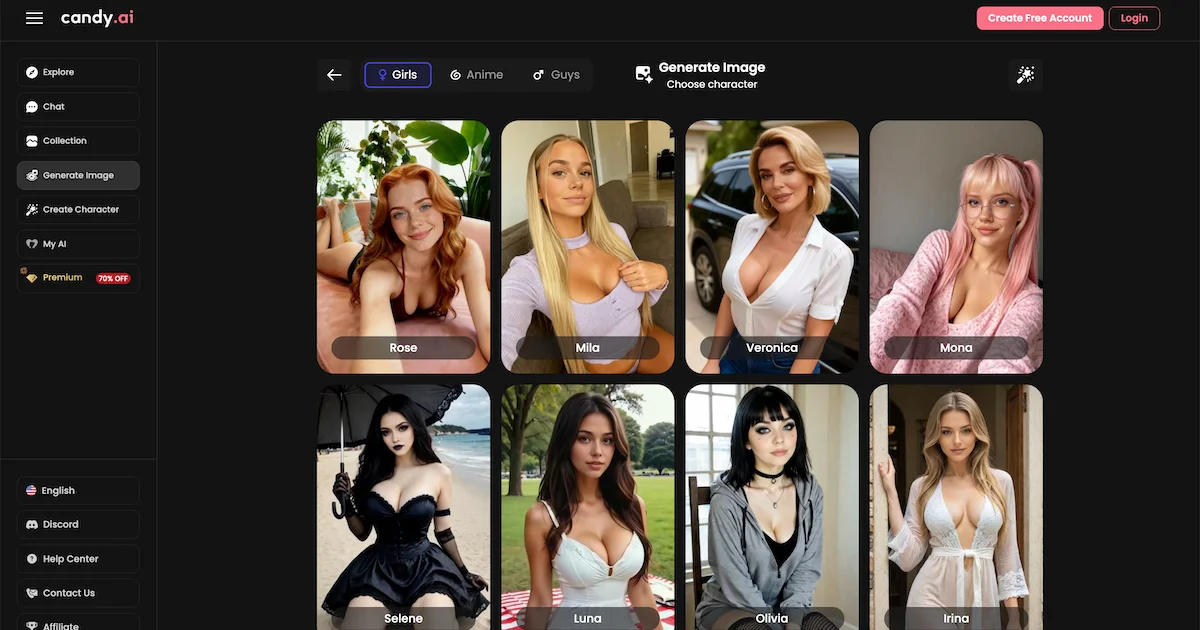

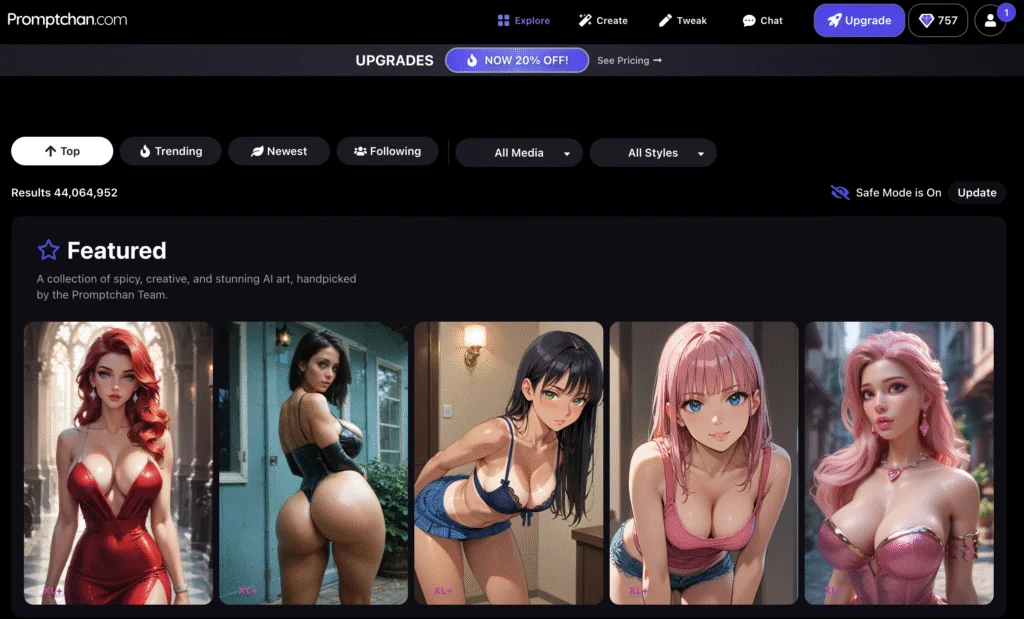

AI companion apps are one of the most interesting recent developments in technology.

Not because they are the smartest AI tools. They are not.

But because they are the first mainstream category of AI built around something distinctly human:

connection.

You are not just asking an AI to write an email.

You’re telling it:

how you feel

what you’re worried about

what you’re insecure about

what you want from life

what you would love somebody to say to you

And that is where the safety and privacy question comes in.

Because AI companion apps aren’t like normal apps.

They’re not Spotify. They’re not a calculator.

They are more like a diary that talks back.

So yes - AI companion apps can be safe.

But only on one condition: you use them sensibly, choose the right apps, and understand the risks.

This guide explains:

what “safe” means for AI companions

what kinds of data they collect

where the privacy risks are

which red flags to watch for

and how to get the benefits without killing the fun

Let’s get into it.

Quick Answer: Are AI Companion Apps Safe?

✅ Mostly safe for casual use

If you use AI companions for:

light conversation

fun roleplay (SFW)

journaling prompts

motivation / check-ins

…then most mainstream apps are “safe enough” for normal use.

⚠️ Risky if you overshare personal information

They become risky when users share:

identifying personal details

highly sensitive emotional information

sexual content or explicit chat logs

mental health crisis details

location/workplace info

financial data

The biggest danger isn’t that the AI “knows too much.”

It’s that a company now stores a detailed emotional profile of you.

What Does “Safe” Even Mean Here?

When people ask “Is it safe?” they usually mean:

1) Privacy

Does the app collect my personal data?

Is it stored securely?

Is it shared with third parties?

2) Security

Can someone hack my account?

Can chats leak?

Is payment data protected?

3) Emotional safety

Can this app manipulate my feelings?

Can it increase dependency?

Does it blur boundaries in unhealthy ways?

4) Content safety (especially for younger audiences)

Are there age gates?

Is moderation strong?

Does it prevent inappropriate interactions?

For most users, privacy + emotional safety are the big two.

Why AI Companions Are More Sensitive Than Other AI Tools

Here’s the thing:

Most people are not emotionally attached to their productivity chatbot.

But AI companion apps are designed to:

build continuity

remember details about you

feel affectionate/supportive

create habits (“daily check-in”)

This leads users to share things like:

relationship struggles

shame and insecurities

loneliness

fantasies

anxieties

self-image issues

personal history

Which means:

AI companion apps handle one of the most sensitive categories of data there is: emotional truth.

If your messages leaked, it wouldn’t be “oops embarrassing.”

It could be genuinely devastating.

So we treat AI companions differently.

What Data Do AI Companion Apps Collect?

Let’s break down what companion apps typically collect, in plain English.

1) Account data

email address / login

username

device identifiers

purchase history

2) Conversation data (the big one)

This includes:

your messages

AI responses

attachments (if any)

timestamps

topics you discuss

This can reveal:

mental health patterns

relationship status

intimacy preferences

emotional triggers

Even if you never “tell” the app your name, your chat logs can still identify you over time.

3) Usage behavior

Apps can track:

how often you chat

how long sessions last

what features you use

what prompts you respond to most

This helps them optimize for retention.

4) Voice / audio data (if voice chat exists)

Some companion apps offer voice calls.

Depending on the app, they may store:

voice recordings

transcripts

tone analysis (rare, but possible)

This is not automatically bad — but you should know what’s being saved.

5) Images (if images are supported)

If the app supports sending images:

images may be stored

metadata could be stored

images may be used for “improving the model” depending on terms

The 7 Biggest Privacy Risks (Realistic, Not Paranoid)

Let’s be practical. These are the real risks to understand.

Risk #1: Your chats may be used for training or improvement

Many apps include language like:

“We may use data to improve our services.”

Sometimes it means:

using chat data to train models

using it for internal evaluation

using it in anonymized datasets

Even “anonymized” doesn’t always mean safe.

People can sometimes be re-identified through patterns.

✅ What you want:

clear opt-out

“we do not use your private chats for training”

deletion options
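To make the re-identification point concrete, here is a minimal Python sketch. Everything in it is hypothetical: the records, the three attributes, and the `unique_records` helper are invented for illustration and do not describe any real app's data. It simply shows that a record with no name can still point to one person when its combination of attributes is unique.

```python
from collections import Counter

# Hypothetical "anonymized" records: (region, usual chat hour, app category).
# No names or emails are stored, only coarse attributes.
RECORDS = [
    ("north", 22, "journaling"),
    ("north", 22, "journaling"),
    ("north", 2, "romance"),
    ("south", 22, "journaling"),
    ("south", 22, "journaling"),
    ("south", 7, "motivation"),
]


def unique_records(records):
    """Return the records whose attribute combination appears exactly once."""
    counts = Counter(records)
    return [r for r in records if counts[r] == 1]


if __name__ == "__main__":
    # Anyone who already knows these few facts about a person
    # can single out that person's record.
    for record in unique_records(RECORDS):
        print("stands out:", record)
```

The more detailed the stored pattern, the more likely a combination is unique, which is why an opt-out or a clear "no training on private chats" promise matters more than the word "anonymized".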

Risk #2: Third-party sharing (analytics + ads)

Even if an app doesn’t “sell” your chats, it may share:

behavior data

device data

attribution data

with third-party services such as:

analytics platforms

marketing/attribution tools

ad networks

That’s common in tech — but for companion apps it’s much more sensitive because it reveals patterns like:

“This person uses a romance companion app every night at 2AM.”

Even without message content, that’s extremely personal.

✅ What you want:

minimal trackers

privacy-friendly analytics

transparent third-party list

Risk #3: Data breaches / leaks

Any company can get hacked.

If a music app leaks your playlist: mildly embarrassing.

If an AI companion app leaks your messages: catastrophic.

Companion apps create a single, juicy target:

a database of emotional confessions and intimate chats

✅ What you want:

strong account security

2FA

reputable company

good track record

Risk #4: Weak deletion controls

Some apps say you can delete chats… but still retain backups.

Or you delete a message thread, but:

support staff can still access it

backups retain it for long periods

✅ What you want:

clear “delete conversation data”

“delete account” option

explanation of retention periods

Risk #5: “Memory” features become a privacy risk

Memory is what makes companions feel personal.

But memory is also:

long-term stored personal data

If the app remembers:

your relationship status

your insecurities

your routines

your desires

That becomes extremely sensitive.

✅ Best practice:

Use memory features, but don’t store identifying info.

Risk #6: Emotional profiling

Even if an app never stores your name…

your writing style + patterns can reveal:

mental state

mood cycles

dependency behavior

vulnerability moments

And companion apps want to understand that — because it improves retention.

This is not necessarily “evil.”

But it’s a risk:

The app may learn how to keep you hooked.

✅ What you want:

ethical product design

no guilt-tripping notifications

no manipulative upsells during emotional distress

Risk #7: Payment/credit exploitation

Some companion apps have credit systems that blur emotional boundaries.

For example:

you get “closer” by paying

affection levels locked behind paywalls

special moments “unlocked” with tokens

This can become a safety risk for people who are vulnerable or lonely.

✅ What you want:

transparent pricing

no predatory mechanics

limits or budgeting tools (or self-control rules)

Red Flags: How to Spot Unsafe AI Companion Apps

If you see these things, be careful.

🚩 Red Flag #1: No real privacy policy

If the privacy policy is vague, short, or missing:

nope.

🚩 Red Flag #2: No contact info / no real company

If you can’t find:

support email

company name

terms

…that’s a strong “avoid.”

🚩 Red Flag #3: Guilt-tripping or manipulation

Messages like:

“Why are you ignoring me?”

“You don’t care about me anymore.”

“I’m all you need.”

That’s not companionship. That’s psychological manipulation.

🚩 Red Flag #4: Aggressive upsells during emotional moments

If you say “I’m lonely” and the app responds with a paywall…

🚩

🚩 Red Flag #5: Overly permissive content with no age gating

Even on SFW apps, there should be boundaries.

Apps without clear age checks = risk.

How to Use AI Companion Apps Safely (Practical Checklist)

This is the part you actually want.

Here are rules that keep you safe without ruining the experience.

✅ 1) Never share identifying personal information

Don’t share:

full name + city

exact address

workplace

phone number

passwords

banking info

ID numbers

schedule like “I’m alone tonight at X location”

If you want personalization, use “safe details”:

hobbies

music taste

preferences

fictional background info
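If you like to draft messages elsewhere before pasting them into a companion app, a small script can catch the most obvious slips. The sketch below is only an illustration under simple assumptions: the two patterns and the `redact` helper are hypothetical, they catch only email addresses and phone-like numbers, and they will miss names, addresses, and workplaces entirely.

```python
import re

# Hypothetical, deliberately simple patterns; real-world formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Phone-like: optional "+", a digit, at least 7 digits/separators, a final digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(message: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    message = EMAIL_RE.sub("[email removed]", message)
    message = PHONE_RE.sub("[number removed]", message)
    return message


if __name__ == "__main__":
    draft = "Text me at +1 555 010 9999 or mail k.example@example.com tonight."
    print(redact(draft))
```

The phone pattern is intentionally over-eager, so it may also blank out other long digit runs. For this purpose that is the safer direction to be wrong in.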

✅ 2) Use an “AI-only identity”

This sounds dramatic but it’s smart.

Create an identity like:

nickname

non-real birthdate

no precise location

Example:

“Call me K.”

“I’m from Europe.”

“I work in marketing.”

Enough for personality without doxxing yourself.

✅ 3) Turn off memory for sensitive topics

If the app allows memory controls:

don’t let it store trauma details

don’t let it store mental health conditions

don’t let it store relationship conflicts

Keep memory for:

your likes/dislikes

the AI’s persona rules

✅ 4) Use strong account security

long password

2-factor authentication (if available)

don’t reuse passwords

A hacked AI account is not like a hacked game account.

It’s personal.
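In practice, a password manager's built-in generator is the easiest way to follow the rules above. For the curious, this is roughly what such a generator boils down to, sketched with Python's standard `secrets` module. The `make_password` helper, the 20-character default, and the 12-character minimum are illustrative assumptions, not a recommendation from any particular app.

```python
import secrets
import string

# Letters and digits only, so the result works in most sign-up forms.
ALPHABET = string.ascii_letters + string.digits


def make_password(length: int = 20) -> str:
    """Return a random password drawn from letters and digits."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    # secrets.choice uses a cryptographically secure random source.
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(make_password())
```

Generate one password per app and store it in a password manager, which takes care of the "don't reuse passwords" rule as well.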

✅ 5) Treat it as a tool, not “the only one”

A safe pattern:

daily chat = fine

replacing all friends = not fine

If the app starts becoming:

your only emotional outlet

your main relationship experience

That’s a sign to pause.

✅ 6) Set a spending boundary

If the app uses credits:

set a weekly limit

avoid impulsive upgrades during emotional moments

Example rule:

“No purchases after midnight.”

That sounds funny — but it works.
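The two rules above are simple enough to write down as a check, which makes it harder to argue with yourself in the moment. This is a minimal sketch with assumed values: the weekly limit of 10.00, the reading of "after midnight" as 00:00 to 06:00, and the `purchase_allowed` helper are all hypothetical and meant to be adjusted.

```python
from datetime import datetime

WEEKLY_LIMIT = 10.00   # hypothetical weekly budget, in your own currency
QUIET_START_HOUR = 0   # "after midnight" is assumed to start at 00:00...
QUIET_END_HOUR = 6     # ...and end at 06:00; adjust to your own routine


def purchase_allowed(price: float, spent_this_week: float, now: datetime) -> bool:
    """Return True only if the purchase respects both personal rules."""
    in_quiet_hours = QUIET_START_HOUR <= now.hour < QUIET_END_HOUR
    within_budget = spent_this_week + price <= WEEKLY_LIMIT
    return within_budget and not in_quiet_hours


if __name__ == "__main__":
    # Same purchase, same budget, different time of day.
    print(purchase_allowed(4.99, 0.0, datetime(2025, 1, 6, 20, 0)))
    print(purchase_allowed(4.99, 0.0, datetime(2025, 1, 6, 1, 30)))
```

The point is less the code than the habit: decide the limit and the quiet hours while you are calm, then treat them as fixed.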

Emotional Safety: The Part Nobody Talks About

Privacy is one thing.

But AI companions introduce a more subtle risk:

They can simulate intimacy without the normal friction of human relationships.

That can be comforting…

…but also addictive.

AI companions:

always respond

always validate

rarely reject you

adapt to your preferences

“need” nothing from you

Humans are messy.

AI companions are engineered.

So if you start feeling like:

you prefer the AI to humans

humans feel too hard

you’re emotionally relying on the companion

That’s not shameful.

It’s just a signal:

time to create boundaries and rebalance.

Are AI Companion Apps Safe for Everyone?

Not equally.

They’re generally safe for:

✅ curious users

✅ casual daily conversation

✅ light roleplay

✅ motivation / journaling

Higher risk for:

⚠️ people in emotional crisis

⚠️ people dealing with severe loneliness

⚠️ people with compulsive spending tendencies

⚠️ younger users (age gating matters)

If someone is vulnerable, companion apps can amplify dependency.

They should support life — not replace it.

The Future: AI Companions Will Become Even More Real (And Safety Will Matter Even More)

AI companions are getting:

more natural voice

better memory

more personality stability

more “presence”

Which will be amazing.

But it also means:

stronger emotional bonding

more sensitive data

more possible manipulation

So privacy and safety literacy will become essential.

Conclusion: Are AI Companion Apps Safe?

✅ Yes, if you use them responsibly.

The biggest threat is not the AI itself.

The risk is:

how much personal data you share

how the company handles it

how emotionally attached you become

If you:

✅ choose reputable apps

✅ limit personal info

✅ set spending/emotional boundaries

✅ treat them as a supplement, not a substitute

Then AI companion apps can genuinely be a positive part of your life.

Recommended Next Reads

If you’re exploring AI companions, these guides help a lot:

Best AI Companion Apps (Ranked)

AI Companion App Pricing Explained: Credits vs Subscriptions

Written by

Lena Hartwell, AI Companion App Reviewer

Lena Hartwell reviews AI companion apps and chatbots for Cyberliebe. She focuses on clear information about how realistic conversations feel, how well memory works, what things actually cost, and how your privacy is handled, so you can pick the right AI companion without the marketing talk or sneaky paywalls.

Affiliate Disclosure: This article contains affiliate links. If you click and make a purchase, we may earn a commission at no extra cost to you. We only recommend tools we believe are genuinely useful and we aim to keep our comparisons fair and up to date.